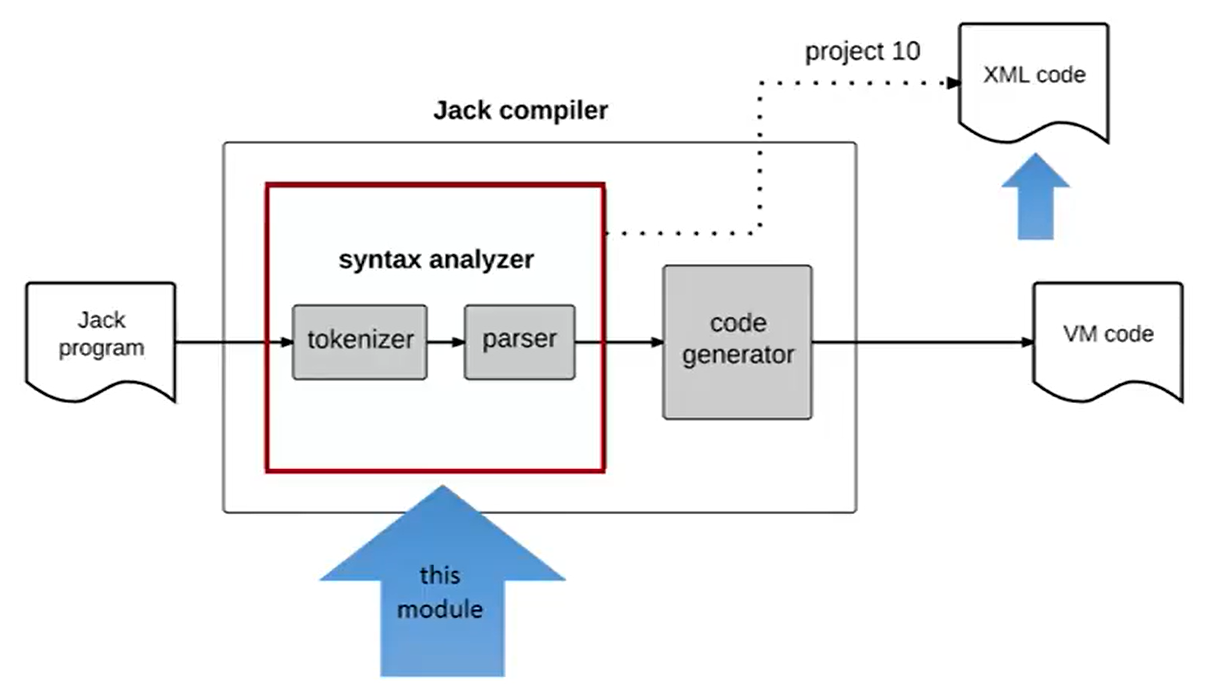

Unit 10.1 Compiler I: Roadmap

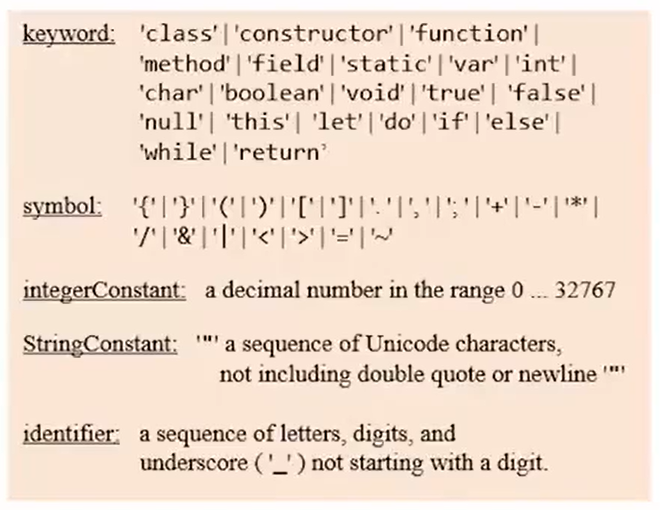

Unit 10.2 Lexical Analysis

Tokenizing(first approximation)

Tokenizing = grouping characters into tokens.

A token is a string of characters that has a meaning.

A programming language specification must document(among other things) it’s allowable tokens.

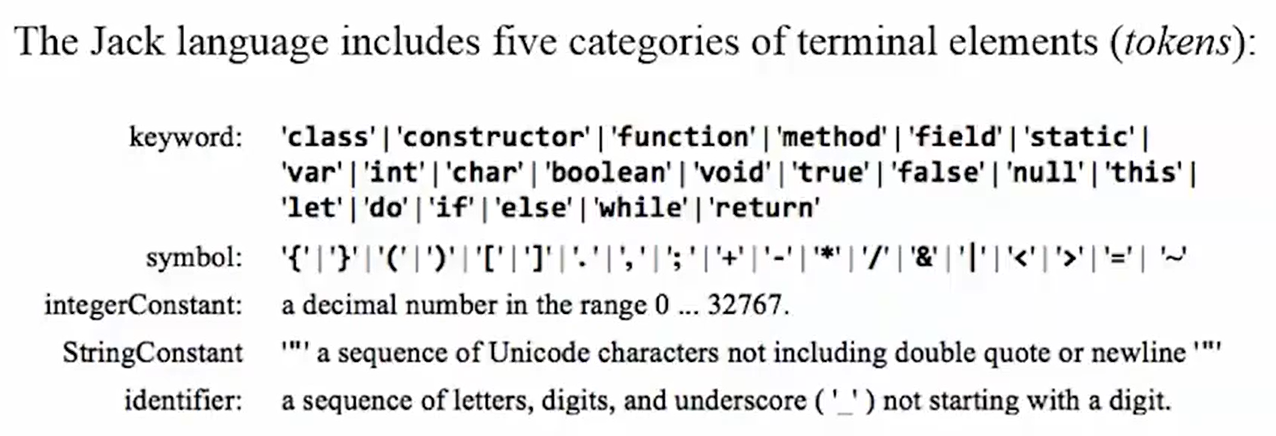

Jack tokens

- keywords

- symbols

- integers

- strings

- identifiers

Tokenizer:

- Handles the compiler’s input

- Allows advancing the input

- Supplies the current token’s value and type

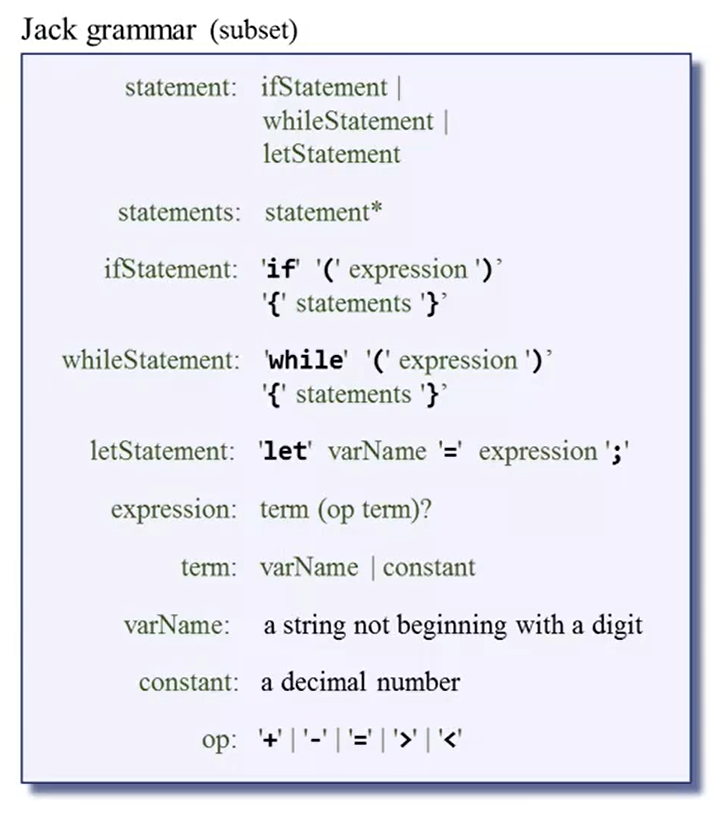

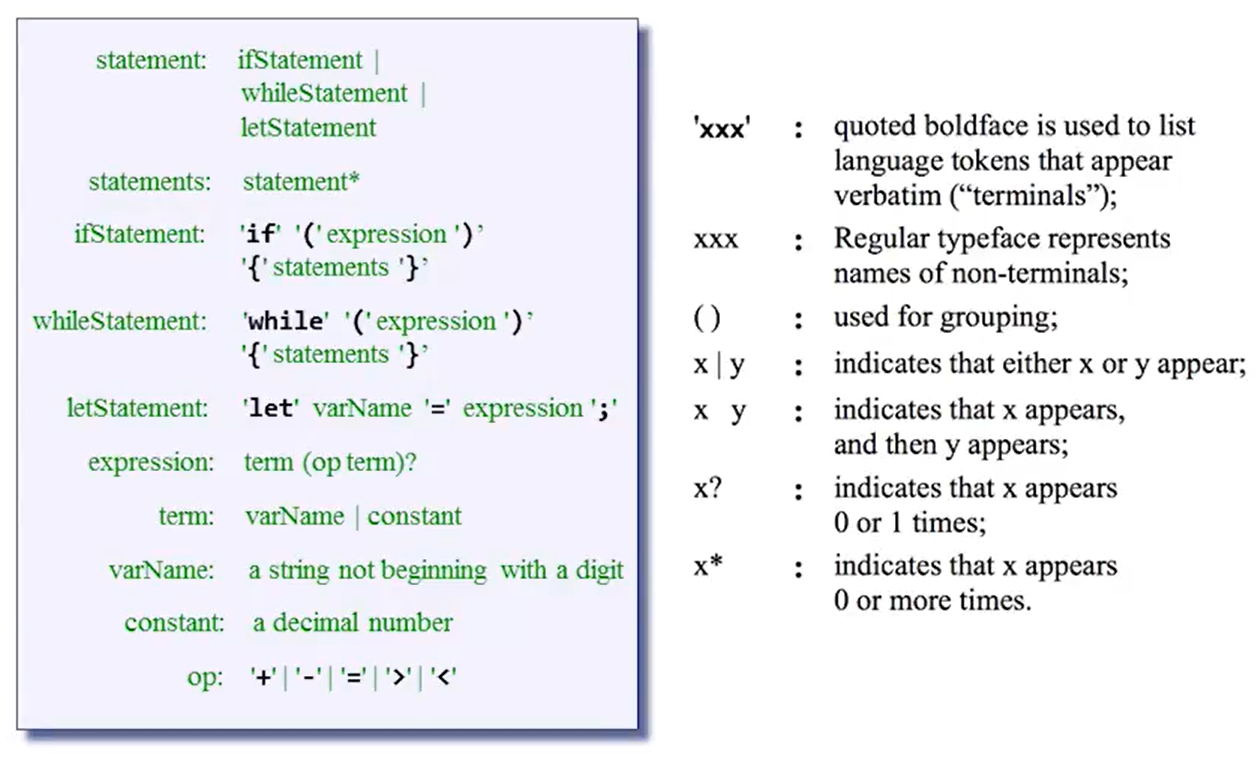

Unit 10.3 Grammars

- A grammar is a set of rules, describing how tokens can be combined to create valid language constructs

- Each rule consists of a left-hand side, listing a template’s name, and a right-hand side, describing how the template is composed.

Parsing:

- Determining if a given input conforms to a grammar

- In the process, uncovering the grammatical structure of the given input.

Unit 10.4 Parse Trees

Parse tree is a data structure. If you have learned the course of data structure, it will be easy to understand parse tree.

Unit 10.5 Parser Logic

A. Parsing logic

- Follow the rule, and parse the input accordingly

- If get a non-terminal rule xxx, call compilexxx

- Do this recursively

B. Init

- Advance the tokenizer

- Call compileWhileStatement(see the video for more details🔍)

C. Parser design

- A set of compilexxx methods, one for each(non-trivial) rule xxx

- The handing of some rules is embedded in other rules

- The code of each compilexxx method follows the rule xxx

- Each compilexxx method is responsible for advancing and handing it’s own part of the input

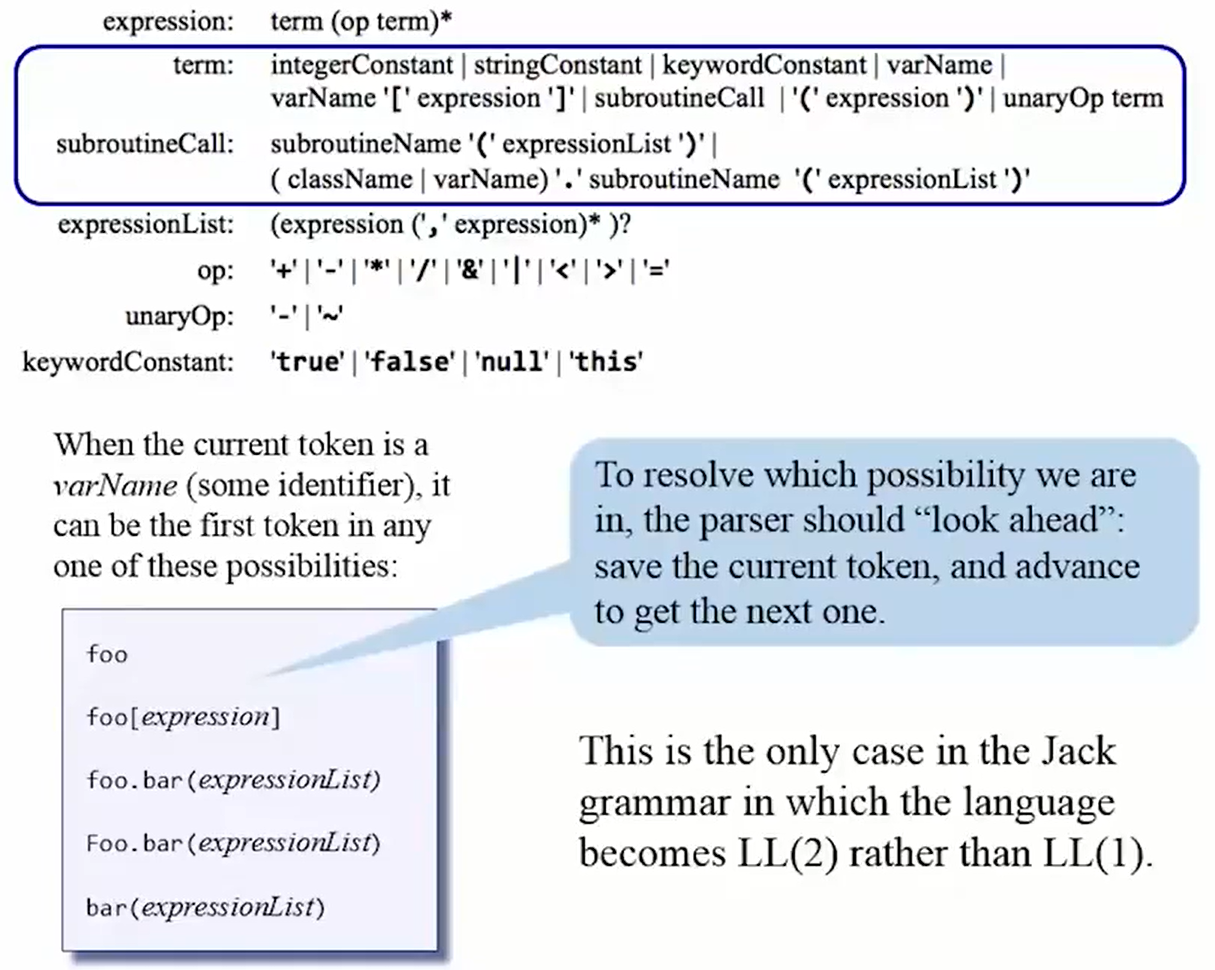

D. Some observations about grammars and parsing

- LL grammar: can be parsed by a recursive descent parser without backtracking

- LL(k) parser: a parser that needs to look ahead at most k tokens in order to determine which rule is applicable

- The grammar that we saw so far is LL(1)(That means easier to implepement.🎉)

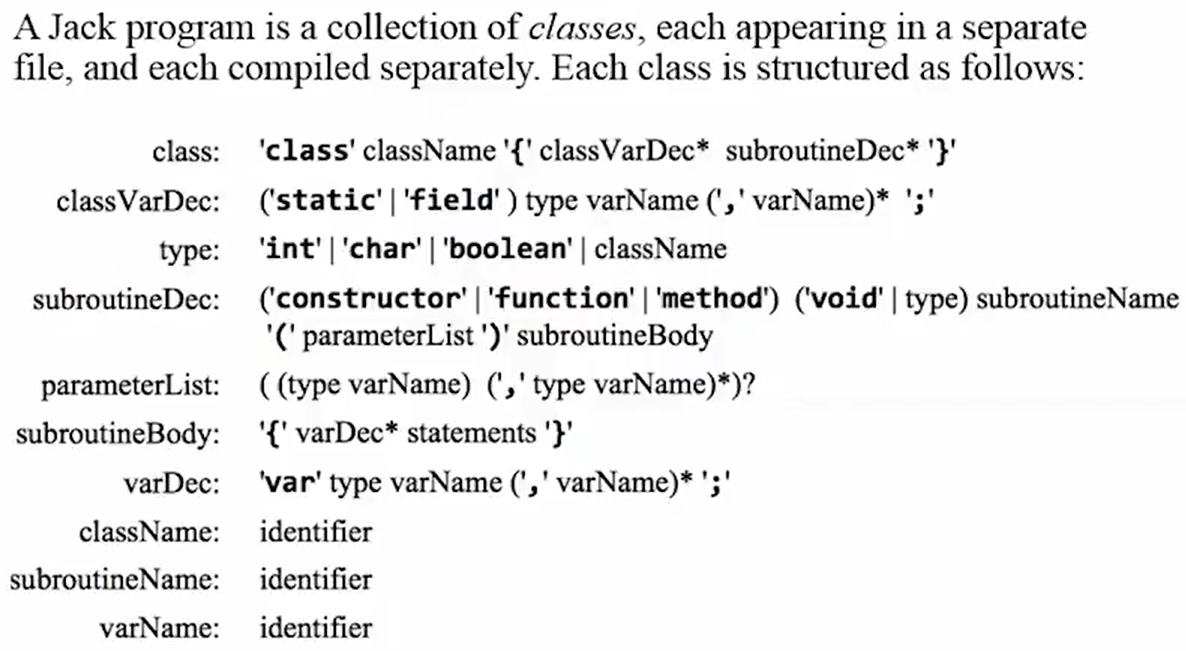

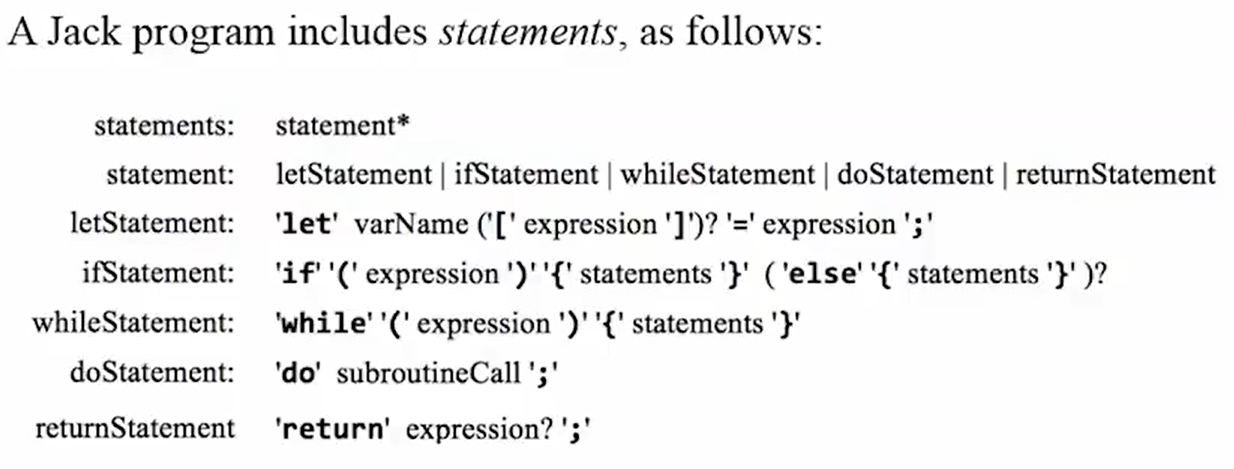

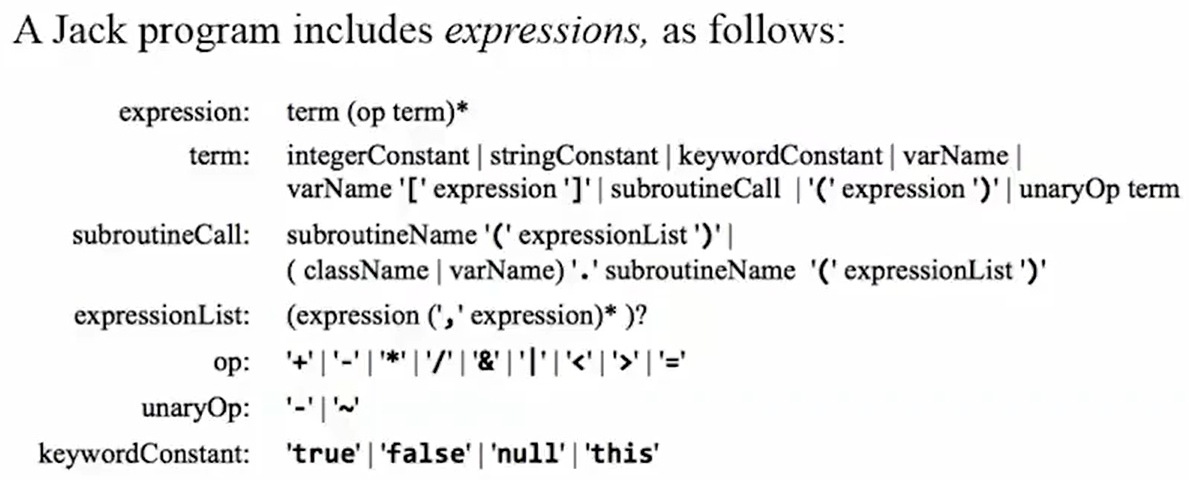

Unit 10.6 The Jack Grammar

lexical elements

program structure

statements

expressions

Unit 10.7 The Jack Analyzer

1 | parser |

Unit 10.8 The Jack Analyzer: Proposed Implementations

Implementation plan: JackTokenizer -> CompilationEngine -> JackAnalyzer(top-most module)

The JackAnalyzer:

- Uses the services of a JackTokenizer

- Written according to the Jack grammar

- The top-most module

- Input: a single fileName.jack, or a directory containing 0 or more such files

- For each file, goes through the following logic

- Creates a JackTokenizer from fileName.jack

- Creates an output file named fileName.xml and prepares it for writing

- Creates and uses a CompilationEngine to compile the input JackTokenizer into the output file.

The JackTokenizer:

- Handle the compiler’s input

- Ignoring white space

- Advancing the input, one token at a time

- Getting the value and type of the current token

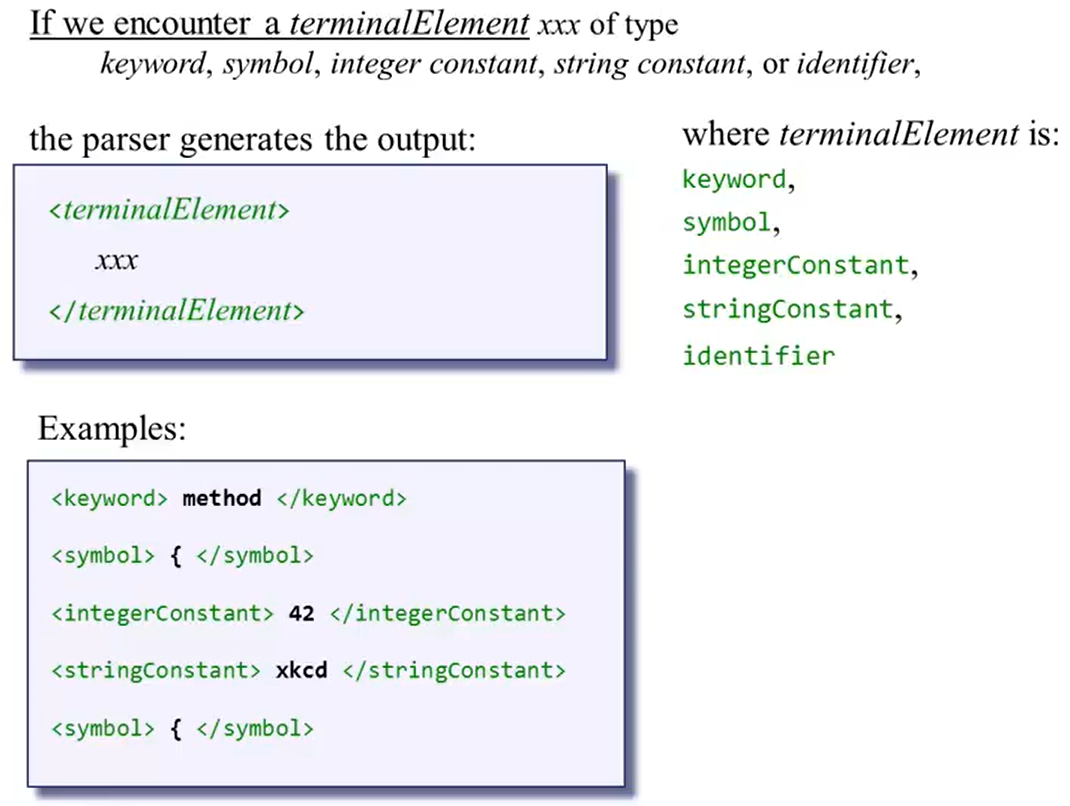

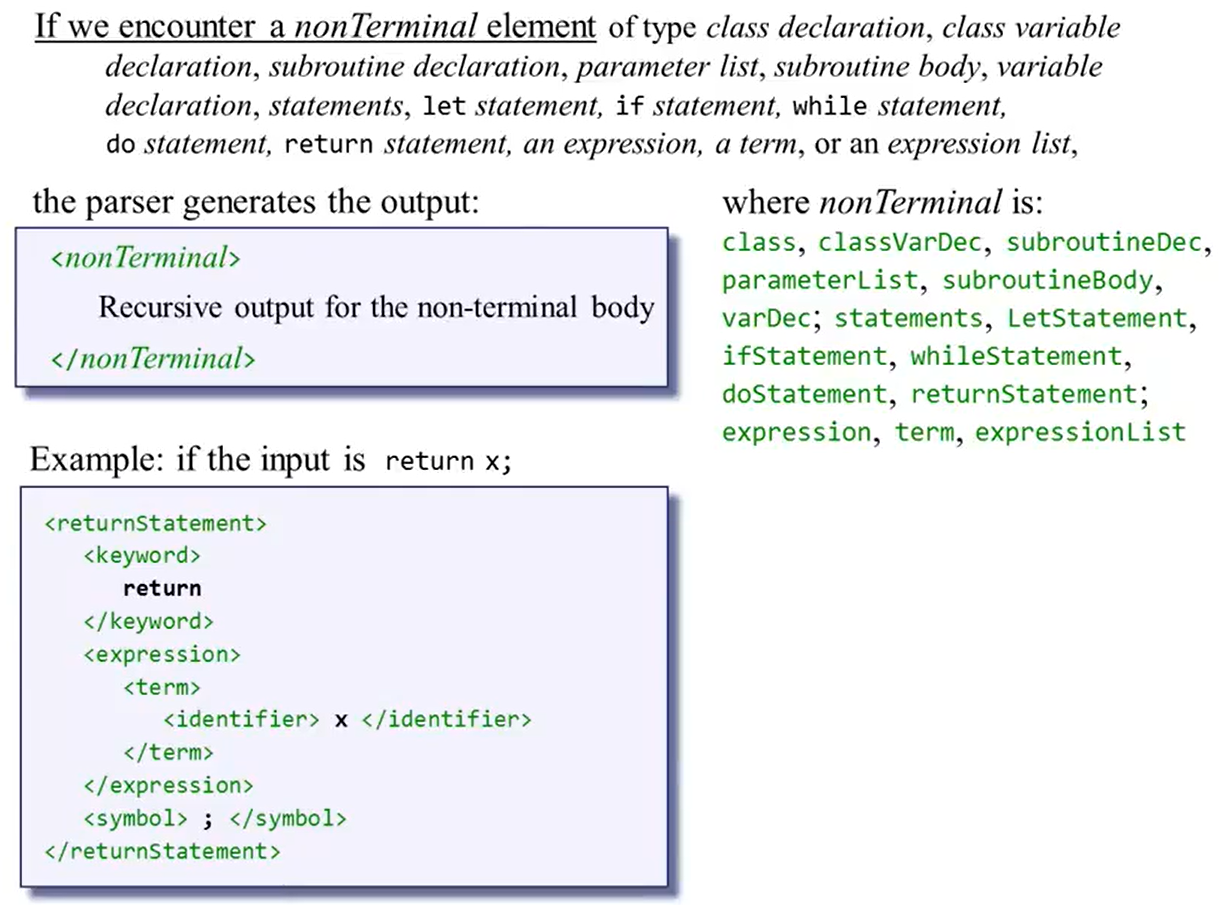

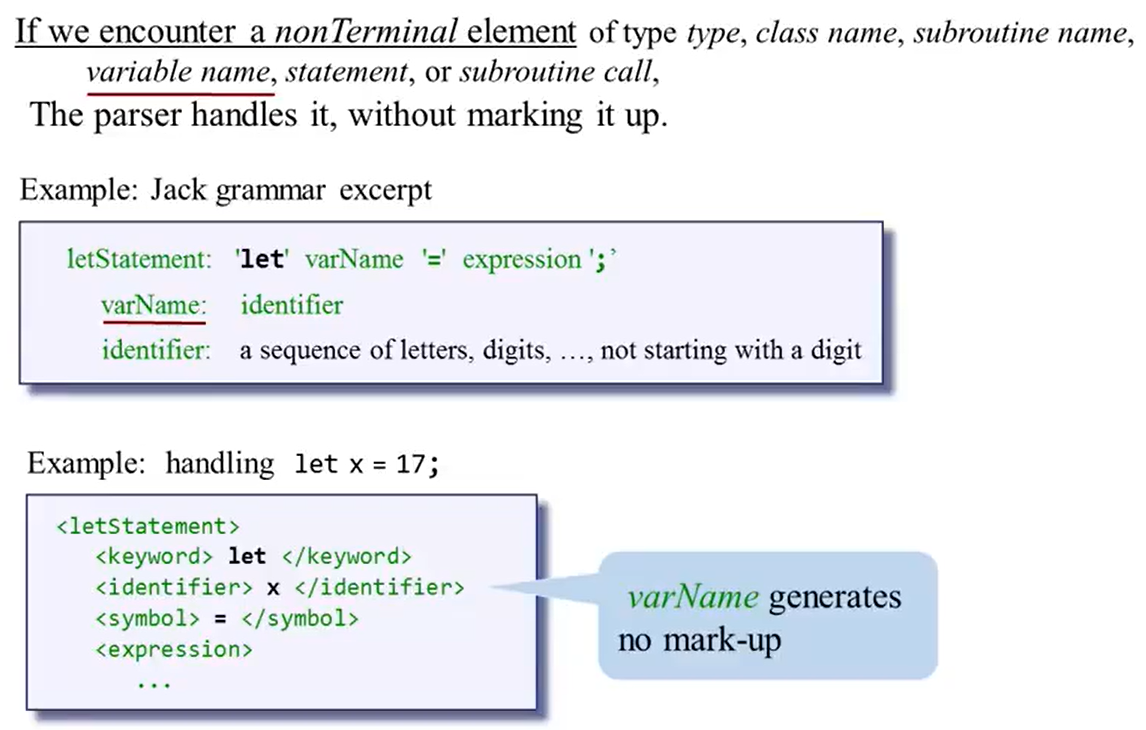

The CompilationEngine design:

- Gets its input from a JackTokenizer, and emits its output to an output file

- The output is generates by a series of compilexxx routines, one for (almost) every non-terminal rule xxx in the grammar

- Each compilexxx routine is responsible for handling all the tokens that make up xxx, advancing the tokenizer exactly beyond these tokens, and outputing the parsing of xxx

Unit 10.9 Project: Building a Syntax Analyzer

A syntax Analyzer has three modules: JackAnalyzer, JackTokenizer and CompilationEngine. These modules are designed to handle the language’s syntax.

Here is the codes(top-down):1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

using namespace std;

int main(int argc, char** argv) {

if(argc != 2) {

cout << "Input error!\nUsage: .\\SyntaxAnalyzer.exe [filename or filepath]" << endl;

return 0;

}

try {

string path(argv[1]);

JackAnalyzer jack(path);

jack.doAnalyzing();

} catch(exception& e) {

cerr << e.what() << endl;

}

return 0;

}

✅ main.cpp: Start Analyzing and catch the exception for debugging.

1 |

|

1 |

|

✅ JackAnalyzer: Parse filepath for tokenizing and compiling.

1 |

|

1 |

|

✅ JackTokenizer: Remove the comments from source file and tokenize the source code.

1 |

|

1 |

|

✅ CompilationEngine: use token parse code.

Unit 10.10 Perspective

- Compilers don’t only translate programs, they also find and report errors. Are we going to handle errors in our Jack compiler?

- We decided to completely sidestep in this module. It’s an optional feature for you.

- Can we use the techniques that we learned in this module to develop parsers for other programming languages?

- Parsers are quite useful not only for parsing programs but also for parsing any syntax-based text which happens a lot in the careers of many application programmers. But it’s not necessarily for language like Java and C++.

- Why didn’t we use lex and yacc?

- lex and yacc are two software tools that come from the world of Unix. Lex stands for Lexical Analyzer which actually refers to a tool which is capable of generating tokenizing code automatically. Yacc stands for the whimsical compiler compiler which actually refers to a tool which is capable of generating parsing code automatically. They generate syntax analysis code that can be customized and developed into a full scale syntax analysis tool. But this course is about doing everything from scratch from the ground up in your bare hands in order to explore and understand. Using black box tools like lex and yacc go against the Nand2tetris spirit.✨